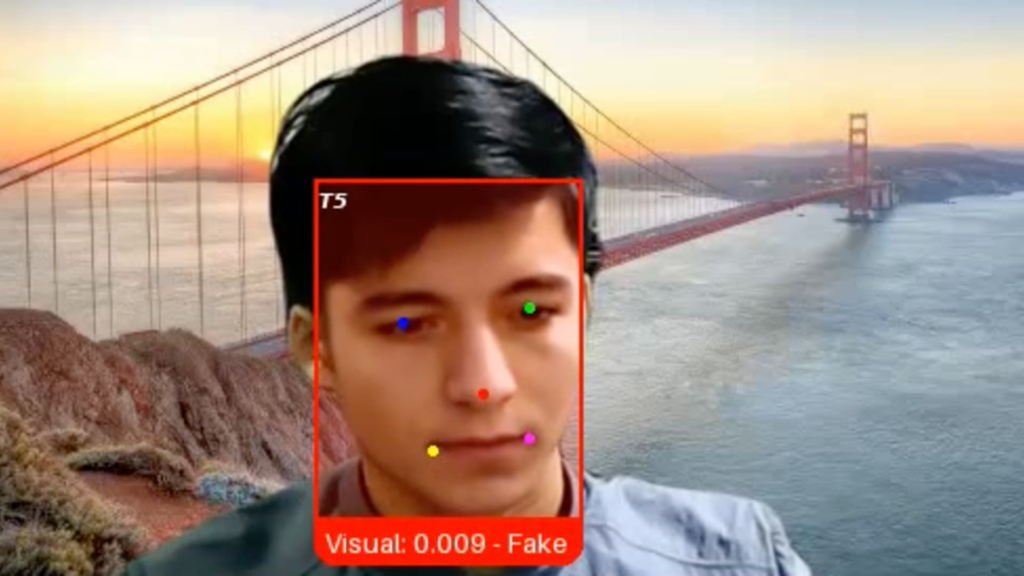

An image provided by Pindrop Security shows a fake job candidate the company dubbed “Ivan X,” a scammer using deepfake AI technology to mask his face, according to Pindrop CEO Vijay Balasubramaniyan.

Courtesy: Pindrop Security

When voice authentication startup Pindrop Security posted a recent job opening, one candidate stood out from hundreds of others.

The applicant, a Russian coder named Ivan, seemed to have all the right qualifications for the senior engineering role. When he was interviewed over video last month, however, Pindrop’s recruiter noticed that Ivan’s facial expressions were slightly out of sync with his words.

That’s because the candidate, whom the firm has since dubbed “Ivan X,” was a scammer using deepfake software and other generative AI tools in a bid to get hired by the tech company, said Pindrop CEO and co-founder Vijay Balasubramaniyan.

“Gen AI has blurred the line between what it is to be human and what it means to be machine,” Balasubramaniyan said. “What we’re seeing is that individuals are using these fake identities and fake faces and fake voices to secure employment, even sometimes going as far as doing a face swap with another individual who shows up for the job.”

Companies have long fought off attacks from hackers hoping to exploit vulnerabilities in their software, employees or vendors. Now, another threat has emerged: job candidates who aren’t who they say they are, wielding AI tools to fabricate photo IDs, generate employment histories and provide answers during interviews.

The rise of AI-generated profiles means that by 2028, 1 in 4 job candidates globally will be fake, according to research and advisory firm Gartner.

The risk to a company from bringing on a fake job seeker can vary, depending on the person’s intentions. Once hired, the impostor can install malware to demand ransom from a company, or steal its customer data, trade secrets or funds, according to Balasubramaniyan. In many cases, the deceitful employees are simply collecting a salary that they wouldn’t otherwise be able to, he said.

‘Massive’ increase

Cybersecurity and cryptocurrency firms have seen a recent surge in fake job seekers, industry experts told CNBC. Because these companies are often hiring for remote roles, they present valuable targets for bad actors, these people said.

Ben Sesser, the CEO of BrightHire, said he first heard of the issue a year ago and that the number of fraudulent job candidates has “ramped up massively” this year. His company helps more than 300 corporate clients in finance, tech and health care assess prospective employees in video interviews.

“Humans are generally the weak link in cybersecurity, and the hiring process is an inherently human process with a lot of hand-offs and a lot of different people involved,” Sesser said. “It’s become a weak point that folks are trying to expose.”

But the issue isn’t confined to the tech industry. More than 300 U.S. firms inadvertently hired impostors with ties to North Korea for IT work, including a major national television network, a defense manufacturer, an automaker and other Fortune 500 companies, the Justice Department alleged in May.

The workers used stolen American identities to apply for remote jobs and deployed remote networks and other techniques to mask their true locations, the DOJ said. They ultimately sent millions of dollars in wages to North Korea to help fund the nation’s weapons program, the Justice Department alleged.

That case, involving a ring of alleged enablers including an American citizen, exposed a small part of what U.S. authorities have said is a sprawling overseas network of thousands of IT workers with North Korean ties. The DOJ has since filed more cases involving North Korean IT workers.

A growth industry

Fake job seekers aren’t letting up, if the experience of Lili Infante, founder and chief executive of CAT Labs, is any indication. Her Florida-based startup sits at the intersection of cybersecurity and cryptocurrency, making it especially alluring to bad actors.

“Every time we list a job posting, we get 100 North Korean spies applying to it,” Infante said. “When you look at their resumes, they look amazing; they use all the keywords for what we’re looking for.”

Infante said her firm leans on an identity-verification company to weed out fake candidates, part of an emerging sector that includes firms such as iDenfy, Jumio and Socure.

An FBI wanted poster shows suspects the agency said are IT workers from North Korea, officially called the Democratic People’s Republic of Korea.

Source: FBI

The fake employee industry has broadened beyond North Koreans in recent years to include criminal groups located in Russia, China, Malaysia and South Korea, according to Roger Grimes, a veteran computer security consultant.

Ironically, some of these fraudulent workers would be considered top performers at most companies, he said.

“Sometimes they’ll do the role poorly, and then sometimes they perform it so well that I’ve actually had a few people tell me they were sorry they had to let them go,” Grimes said.

His employer, the cybersecurity firm KnowBe4, said in October that it had inadvertently hired a North Korean software engineer.

The worker used AI to alter a stock photo, combined with a valid but stolen U.S. identity, and got through background checks, including four video interviews, the firm said. He was discovered only after the company found suspicious activity coming from his account.

Fighting deepfakes

Despite the DOJ case and a few other publicized incidents, hiring managers at most companies are generally unaware of the risks of fake job candidates, according to BrightHire’s Sesser.

“They’re responsible for talent strategy and other important things, but being on the front lines of security has historically not been one of them,” he said. “Folks think they’re not experiencing it, but I think it’s probably more likely that they’re just not realizing that it’s going on.”

As the quality of deepfake technology improves, the issue will be harder to avoid, Sesser said.

As for “Ivan X,” Pindrop’s Balasubramaniyan said the startup used a new video authentication program it created to confirm he was a deepfake fraud.

While Ivan claimed to be located in western Ukraine, his IP address indicated he was actually thousands of miles to the east, in a possible Russian military facility near the North Korean border, the company said.

Pindrop, backed by Andreessen Horowitz and Citi Ventures, was founded more than a decade ago to detect fraud in voice interactions, but may soon pivot to video authentication. Its clients include some of the biggest U.S. banks, insurers and health companies.

“We are no longer able to trust our eyes and ears,” Balasubramaniyan said. “Without technology, you’re worse off than a monkey with a random coin toss.”