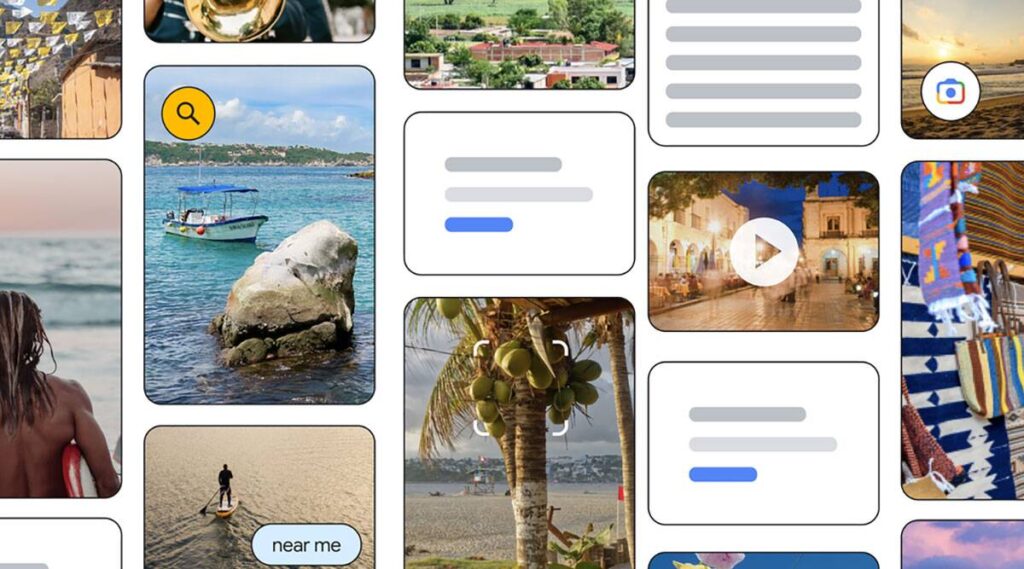

Google Search is getting a slew of new features, the company announced at its ‘Search On’ event, and many of these will ensure richer and more visually focused results. “We’re going far beyond the search box to create search experiences that work more like our minds, that are as multi-dimensional as people. As we enter this new era of search, you’ll be able to find exactly what you’re looking for by combining images, sounds, text and speech. We call this making Search more natural and intuitive,” Prabhakar Raghavan, Google SVP of Search, said during the keynote.

First, Google is expanding the multisearch feature, which it introduced in beta in April this year, to English globally, and it will come to 70 more languages over the next few months. The multisearch feature lets users search for multiple things at the same time by combining both images and text. The feature can be used along with Google Lens as well. According to Google, users rely on its Lens feature nearly eight billion times a month to search for what they see.

But by combining Lens with multisearch, users will be able to take a picture of an item and then use the phrase ‘near me’ to find it nearby. Google says this “new way of searching will help users find and connect with local businesses.” “Multisearch near me” will start rolling out in English in the US later this fall.

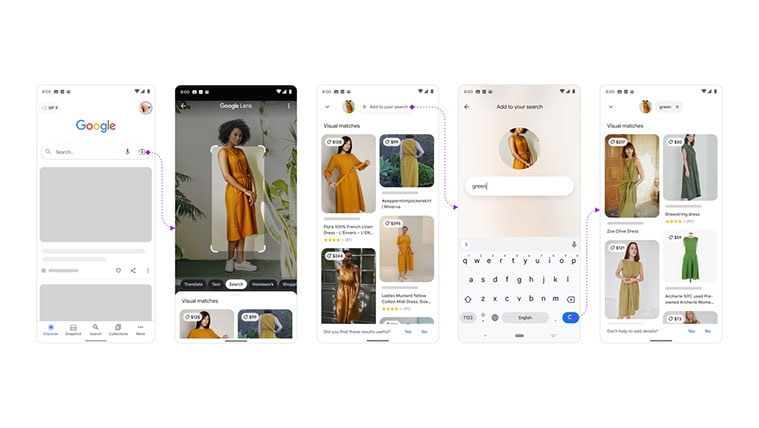

Google Search shopping when used with the multisearch feature. (Image: Google)

“This is made possible by an in-depth understanding of local places and product inventory, informed by the millions of images and reviews on the web,” Raghavan said regarding multisearch and Lens.

Google is improving how translations appear over an image. According to the company, people use Google to translate text on images over 1 billion times per month, across more than 100 languages. With the new feature, Google will be able to “blend translated text into complex images, so it looks and feels much more natural.” The translated text will look more seamless, like a part of the original image, instead of standing out. According to Google, it is using “generative adversarial networks (also known as GAN models), which is what helps power the technology behind Magic Eraser on Pixel,” to ensure this experience. This feature will roll out later this year.

It is also improving its iOS app, where users will be able to see shortcuts right under the search bar. These will help users shop using their screenshots, translate any text with their camera, find a song and more.

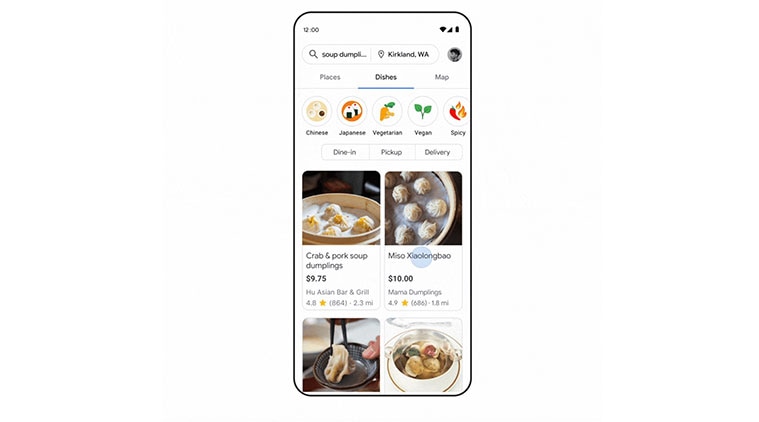

Food results in the revamped Google Search.

Google Search’s results will also get more visually rich when users are searching for information about a place or topic. In the example Google showed, when searching for a city in Mexico, the results also display videos, images and other information about the place, all in the first set of results. Google says this will ensure a user doesn’t have to open multiple tabs when trying to get more information about a place or a topic.

Google will also show more relevant information even as a user starts to type a question, offering “keyword or topic options to help” users craft their questions. It will also showcase content from creators on the open web for some of these topics, such as cities, along with travel tips. The “most relevant content, from a variety of sources, no matter what format the information comes in, whether that’s text, images or video,” will be shown, notes the company’s blog post. The new feature will be rolled out in the coming months.

When it comes to searching for food, whether a particular dish or an item at a restaurant, Google will show visually richer results, including images of the dish in question. It is also expanding “coverage of digital menus, and making them more visually rich and reliable.”

According to the company, it is combining “menu information provided by people and merchants, and found on restaurant websites that use open standards for data sharing,” and relying on its “image and language understanding technologies, including the Multitask Unified Model,” to power these new results.

“These menus will showcase the most popular dishes and helpfully call out different dietary options, starting with vegetarian and vegan,” Google said in a blog post.

It will also tweak how shopping results appear on Search, making them more visual along with links, as well as letting users shop for a ‘complete look’. The search results will also support 3D shopping for sneakers, where users will be able to view these particular items in 3D.

Google Maps

Google Maps is also getting some new features which will offer more visual information, though most of these will be limited to select cities. For one, users will be able to check the ‘Neighbourhood vibe’, meaning they can figure out the places to eat, the places to visit and so on in a particular locality.

This will appeal to tourists, who will be able to use the information to know a district better. Google says it is using “AI with local knowledge from Google Maps users” to provide this information. Neighbourhood vibe starts rolling out globally in the coming months on Android and iOS.

It is also expanding the immersive view feature to let users see 250 photorealistic aerial views of global landmarks that span everything from the Tokyo Tower to the Acropolis. According to Google’s blog post, it is using “predictive modelling,” and that is how immersive view automatically learns historical trends for a place. Immersive view will roll out in the coming months in Los Angeles, London, New York, San Francisco and Tokyo on Android and iOS.

Users will also be able to see helpful information with the Live View feature. The search with Live View feature helps users find a place around them, say a market or a store, while they are walking around. Search with Live View will be made available in London, Los Angeles, New York, San Francisco, Paris and Tokyo in the coming months on Android and iOS.

It is also expanding its eco-friendly routing feature, which launched earlier in the US, Canada and Europe, to third-party developers through Google Maps Platform. Google is hoping that companies in other industries, such as delivery or ridesharing services, will have the option to enable eco-friendly routing in their apps and measure fuel consumption.