Palo Alto, California-based Skyflow, a company that makes it easier for developers to embed data privacy into their applications, today announced the launch of a “privacy vault” for large language models (LLMs).

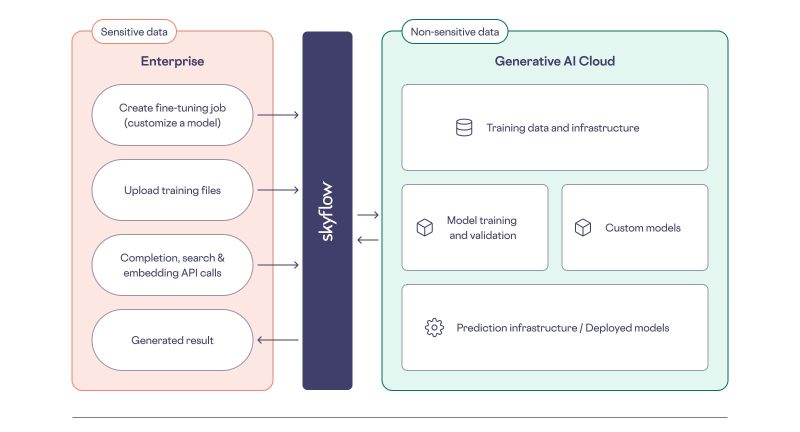

The solution, as the name suggests, provides enterprises with a layer of data privacy and security throughout the entire lifecycle of their LLMs, starting with data collection and continuing through model training and deployment.

It comes as enterprises across sectors continue to race to embed LLMs, like the GPT series of models, into their workflows to simplify processes and boost productivity.

Why a privacy vault for GPT models?

LLMs are all the rage today, helping with things like text generation, image generation and summarization. However, most of the models that are out there have been trained on publicly available data. This makes them suitable for broader public use, but not so much for the enterprise side of things.

To make LLMs work in specific enterprise settings, companies need to train them on their internal knowledge. A few have already done it or are in the process of doing it, but the task is not easy, as you have to ensure that the internal, business-critical data used for training the model is protected at all stages of the process.

This is exactly where Skyflow’s GPT privacy vault comes in.

Delivered via API, the solution establishes a secure environment, allowing users to define their sensitive data dictionary and have that information protected at all stages of the model lifecycle: data collection, preparation, model training, interaction and deployment. Once fully integrated, the vault uses the dictionary and automatically redacts or tokenizes the chosen information as it flows through GPT, without lessening the value of the output in any way.
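To make the idea of a sensitive data dictionary concrete, here is a minimal Python sketch of dictionary-driven redaction. It is not Skyflow's API or data format: the `SENSITIVE_PATTERNS` mapping, the `redact` function and the two regular expressions are assumptions chosen purely for illustration, and a production system would need far more robust detection.

```python
import re

# Hypothetical "sensitive data dictionary": label -> pattern.
# Illustrative only; this is not Skyflow's dictionary format.
SENSITIVE_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace every dictionary match with a fixed placeholder such as [EMAIL]."""
    for label, pattern in SENSITIVE_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


prompt = "Contact jane.doe@example.com about SSN 123-45-6789."
print(redact(prompt))  # Contact [EMAIL] about SSN [SSN].
```

Redaction of this kind is one-way: the original values are discarded, so they cannot be restored in the model's output. Tokenization, by contrast, keeps a mapping so that values can be restored later.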

“Skyflow’s proprietary polymorphic encryption technique allows the model to seamlessly handle protected data as if it were plaintext,” Anshu Sharma, Skyflow cofounder and CEO, told VentureBeat. “It will protect all sensitive data flowing into GPT models and only reveal sensitive information to authorized parties once it has been processed by the model and returned.”

For example, Sharma explained, plaintext sensitive data elements like email addresses and Social Security numbers are swapped with Skyflow-managed tokens before inputs are provided to GPTs. This information is protected by multiple layers of encryption and fine-grained access control throughout model training, and ultimately de-tokenized after the GPT model returns its output. As a result, authorized end users get a seamless output experience, with plaintext sensitive data bypassing the GPT model.
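The swap-then-restore flow Sharma describes can be sketched in a few lines of Python. This is a toy under stated assumptions, not Skyflow's implementation: `ToyVault`, `fake_llm` and the `tok_` token format are hypothetical names, the vault is a plain in-memory dictionary with no encryption or access control, and `fake_llm` merely stands in for a real model call.

```python
import re
import secrets

EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


class ToyVault:
    """In-memory stand-in for a vault: maps random tokens to original values."""

    def __init__(self) -> None:
        self._store: dict[str, str] = {}

    def tokenize(self, text: str) -> str:
        """Swap each email address for a random token and remember the mapping."""
        def swap(match: re.Match) -> str:
            token = f"tok_{secrets.token_hex(4)}"
            self._store[token] = match.group(0)
            return token
        return EMAIL.sub(swap, text)

    def detokenize(self, text: str) -> str:
        """Restore original values for any known tokens found in the text."""
        for token, value in self._store.items():
            text = text.replace(token, value)
        return text


def fake_llm(prompt: str) -> str:
    """Placeholder for a model call: greets whatever the last word of the prompt is."""
    return "Hello, " + prompt.split()[-1]


vault = ToyVault()
safe_prompt = vault.tokenize("Write a greeting for alice@example.com")
model_output = fake_llm(safe_prompt)        # the "model" only ever sees the token
final_output = vault.detokenize(model_output)
print(final_output)  # Hello, alice@example.com
```

In this sketch the plaintext address never reaches `fake_llm`; only the caller holding the vault can map the token back to the original value.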

“This works because GPT LLMs already break down inputs to analyze patterns and relationships between them and then make predictions about what comes next in the sequence. So, tokenizing or redacting sensitive data with Skyflow before inputs are provided to the LLM doesn’t impact the quality of GPT LLM output: the patterns and relationships remain the same as before plaintext sensitive data is tokenized by Skyflow,” Sharma added.

The offering can be integrated into an enterprise’s existing data infrastructure. It also supports multi-party training, where two or more entities could share anonymized datasets and train models to unlock insights.

Multiple use cases

While the Skyflow CEO did not share how many companies are using the GPT privacy vault, he did note that the offering, which is an extension of the company’s existing privacy-focused solutions, helps protect sensitive clinical trial data in the drug development cycle as well as customer data used by travel platforms to improve customer experiences.

IBM, too, is a customer of Skyflow and has been using the company’s products to de-identify sensitive information in large datasets before analyzing it via AI/ML.

Notably, there are also alternative approaches to the problem of privacy, such as creating a private cloud environment for running individual models or a private instance of ChatGPT. But these could prove to be far more expensive than Skyflow’s solution.

Currently, in the data privacy and encryption space, the company competes with players like Immuta, Securiti, Vaultree, Privitar and Basis Theory.